Cleaning up the website

After publishing my Hugo/AsciiDoc blog, I wondered:

- Are there any tracking scripts on my pages?
- Does my website make unnecessary connections to external domains?
- Is my website loading fast enough?

Some issues were easy to address; others were much harder to solve.

Note: this article is meant to evolve as I find more things to fix and better solutions to tackle those issues.

Gathering data

First, I wanted to list all the links on my front page. A quick Startpage search led me to this StackExchange post explaining how to do it with lynx. Luckily, I already had lynx installed on my computer, so I tweaked the suggested solution to my needs:

```shell
lynx -listonly -nonumbers -dump https://gnoobix.net | grep -vE 'Visible links|Hidden links|^$' | sort | uniq
```

which produced:

```
https://gnoobix.net/
https://gnoobix.net/en/
https://gnoobix.net/index.xml
https://gnoobix.net/posts/
https://gnoobix.net/posts/creer-un-blog-avec-asciidoctor-et-hugo/
https://gnoobix.net/posts/sauvegarde-yunohost-avec-restic/
https://gnoobix.net/posts/ubuntu-bionic-kvm-ip-failover-ovh/
https://toot.coupou.fr/@lionel
```

OK, no harm here: all of these links point to places I host myself.

The https://toot.coupou.fr domain is my Mastodon instance, which I host as well.
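To answer the question about external domains more directly, the same link list can be reduced to the hosts it points to. This is a small sketch of my own, not part of the StackExchange answer; `list_hosts` is a hypothetical name, and it assumes one absolute URL per line, as lynx prints them (lines that are not URLs are simply ignored).

```shell
# Hypothetical helper: print each host found in a list of URLs read on stdin,
# prefixed by the number of URLs pointing to it, most frequent first.
# Assumes absolute URLs of the form scheme://host[:port]/...
list_hosts() {
  # Keep only the host part (strip scheme, port, path); skip non-URL lines.
  sed -E -n 's#^[[:space:]]*[a-zA-Z][a-zA-Z0-9+.-]*://([^/:]+).*#\1#p' |
    sort | uniq -c | sort -rn |
    # Normalize the padding that uniq -c adds: "count host".
    awk '{ print $1, $2 }'
}
```

Usage: `lynx -listonly -nonumbers -dump https://gnoobix.net | sort -u | list_hosts`. On the deduplicated list above, it prints `7 gnoobix.net` and `1 toot.coupou.fr`, so the only other domain is one I run myself.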

That’s a good start, but I wanted a more visual tool that would show exactly which connections were made when the front page was displayed.
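A link list only shows where a page points, not what it actually loads. A rough, text-only approximation of the latter is to pull the absolute URLs out of the `src` attributes in the page's HTML. This hypothetical `list_src_urls` helper is not part of my original workflow, and it has clear blind spots: stylesheets referenced through `<link href>`, protocol-relative URLs, and anything injected by JavaScript at runtime (where tracking scripts often hide) are all missed. It complements a browser-based view rather than replacing it.

```shell
# Hypothetical helper: list the absolute http(s) URLs found in src="..."
# or src='...' attributes of the HTML read on stdin, deduplicated.
# Static only: it does not run JavaScript, so runtime-injected resources are missed.
list_src_urls() {
  # Extract src="https://... up to the closing quote (grep -o: GNU and BSD grep).
  grep -oE "src=[\"']https?://[^\"']+" |
    # Drop the leading src=" or src=' part, keeping only the URL.
    sed -E "s#^src=[\"']##" |
    sort -u
}
```

Usage: `curl -s https://gnoobix.net | list_src_urls`. Relative paths such as `src="/img/header.jpg"` are ignored on purpose, since they are served by my own domain.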

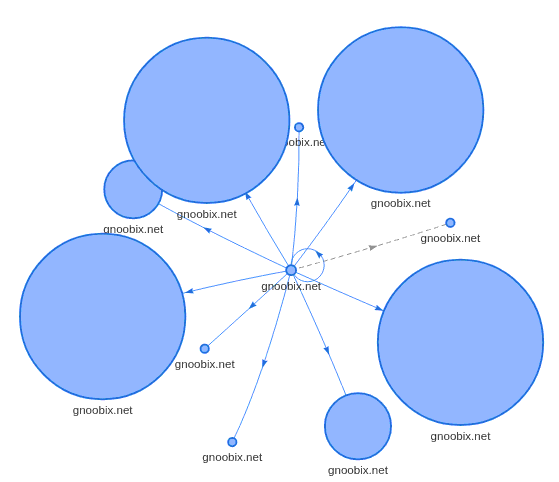

I had already seen images around the web with lots of arrows and circles, picturing the connections made between websites when a page loads.

I did not know where to start. My first instinct was to look for a Firefox add-on, but I could not find any; I guess I didn’t search well enough.

So I searched on Startpage and found https://requestmap.webperf.tools. Since my blog is public, there was no harm in passing its URL to this tool, and it saved me from installing yet another add-on in my browser.

Indeed, the outcome confirmed my first results:

Something was still bugging me, though: those four big discs. Next to this picture, I saw a smaller disc representing the size of my front page: almost 4 MB! Not so good.

Of course, I had used pictures, and I had never bothered checking their size before adding them to the site, but it seemed impossible that they would weigh that much.

I opened the Firefox developer tools and, in the Network tab, saw some pictures weighing more than 800 KB, hence the four big discs. The header picture being quite big, that seemed okay-ish, but I could not understand how the article images could weigh as much. Since they were displayed scaled down, I was naive enough to think that their file size would be reduced too. Silly me: it turns out the browser downloads the original picture and only shrinks it on screen.
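The Network tab shows sizes one page at a time; to spot heavy images across the whole site at once, a `find` over the image directory also works. A minimal sketch, assuming the images live under Hugo's `static/` directory (adjust to your layout) and using a default threshold of 800 KiB to match the sizes seen above; `find_heavy_images` is a hypothetical name.

```shell
# Hypothetical helper: list JPEG/PNG files bigger than a threshold (in KiB)
# under a directory, biggest first. Output lines are "disk-usage-KiB<TAB>path".
# Assumption: images are under static/ unless another directory is given.
find_heavy_images() {
  dir="${1:-static}"
  min_kib="${2:-800}"
  # -size +Nk matches files whose size, rounded up to KiB, exceeds N.
  find "$dir" -type f \( -iname '*.jpg' -o -iname '*.jpeg' -o -iname '*.png' \) \
    -size +"${min_kib}"k -exec du -k {} + |
    sort -rn
}
```

Usage: `find_heavy_images static 800`. The filter relies on each file's actual size; the number printed by `du` is disk usage, which can differ slightly depending on the filesystem.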

Let’s get to work!

I remembered an article by Le Hollandais Volant that I had read a long time ago, so I went back to it and tried to follow his advice.

I installed the required tools (jpegoptim, optipng and pngnq; I already had GIMP installed) and processed all my images: I applied GIMP’s posterize option to all of them, then ran optipng on the PNG files and jpegoptim on the JPEG files.
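The jpegoptim/optipng pass can be scripted so it is easy to rerun whenever new pictures are added. This is a sketch under assumptions: the images are taken to sit under `static/`, and the flags are my own choice, not necessarily those recommended in Le Hollandais Volant's article. jpegoptim runs in its default lossless mode with metadata stripped, and optipng at its default level `-o2`. The GIMP posterize step and pngnq are left out. Files are rewritten in place, so run it on a copy or on a committed tree; `optimize_images` is a hypothetical name.

```shell
# Hypothetical helper: losslessly recompress every JPEG and PNG under a
# directory, in place. Missing tools are reported and skipped.
optimize_images() {
  dir="${1:-static}"
  if command -v jpegoptim >/dev/null 2>&1; then
    # Without -m/--max, jpegoptim stays lossless; --strip-all drops EXIF and comments.
    find "$dir" -type f \( -iname '*.jpg' -o -iname '*.jpeg' \) \
      -exec jpegoptim --quiet --strip-all {} +
  else
    echo "jpegoptim not found, skipping JPEG files" >&2
  fi
  if command -v optipng >/dev/null 2>&1; then
    # -o2 is optipng's default level; higher levels try more and run slower.
    find "$dir" -type f -iname '*.png' -exec optipng -quiet -o2 {} +
  else
    echo "optipng not found, skipping PNG files" >&2
  fi
}
```

Usage: `optimize_images static`. Being lossless, this pass alone will not shrink the pictures as much as posterizing or resizing them does.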

The end result: the page went down to 2.5 MB. Not awesome, but it’s a start: the page was almost 40% lighter.
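For the record, taking the starting weight as 4 MB (it was slightly less, so the real saving is a little lower), the reduction works out as:

$$
\frac{4\,\text{MB} - 2.5\,\text{MB}}{4\,\text{MB}} = \frac{1.5}{4} = 0.375 = 37.5\,\%
$$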

I will have to find a way to stop those scaled-down pictures from loading the full-size originals if I want to drastically cut the page weight.
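One possible direction, sketched here as an assumption rather than a tested fix: generate scaled-down copies of the pictures and reference those in the articles instead of the originals. Hugo's own image processing is another route. With ImageMagick, the `WIDTHx>` geometry shrinks an image to at most WIDTH pixels wide, keeps the aspect ratio, and never enlarges smaller images. `make_resized_copy` is a hypothetical helper; it tries ImageMagick 7's `magick` first and falls back to the older `convert`.

```shell
# Hypothetical helper: write a copy of SRC to DST, scaled down to at most
# WIDTH pixels wide (default 800), keeping the aspect ratio.
# The trailing '>' in the geometry means "only shrink, never enlarge".
make_resized_copy() {
  src="$1"; dst="$2"; width="${3:-800}"
  if command -v magick >/dev/null 2>&1; then
    magick "$src" -resize "${width}x>" "$dst"      # ImageMagick 7
  elif command -v convert >/dev/null 2>&1; then
    convert "$src" -resize "${width}x>" "$dst"     # ImageMagick 6
  else
    echo "ImageMagick not found" >&2
    return 1
  fi
}
```

Usage (paths are hypothetical): `make_resized_copy static/images/photo.jpg static/images/photo-800.jpg 800`, then point the article at `photo-800.jpg`.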